In the summer of 2023, as AI-powered tools surged in popularity following the public release of ChatGPT, my co-founder Pirmin Steiner and I decided to explore the technology's potential in the news media space and build our own AI tool.

Context

A recent study by Reuters reported that news avoidance worldwide had reached a peak. We suspected this was driven in large part by the emotional tone of news headlines, and we wanted to explore whether the news media was contributing to information fatigue through consistently negative headlines.

Our goal was to create a tool that would help journalists and writers understand the emotional impact of their headlines, potentially encouraging a more positive or balanced approach to news reporting.

Background

As a member of the sost collective, I took on the role of developing Spindoctor, an OpenAI GPT wrapper designed to analyze and categorize the sentiment of article headlines.

My responsibilities included building the MVP, designing the prompts for sentiment detection, and setting up a Firebase database to store each query alongside the AI's response. We collected this data so we could refine our prompts and improve the tool's accuracy over time.
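To illustrate the kind of record such a query log might hold, here is a minimal Python sketch. The field names, including `prompt_version`, are hypothetical rather than Spindoctor's actual schema. The Firebase write itself is left out; `to_document` simply returns the plain key-value shape that a document database write would take.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class HeadlineCheck:
    """One logged query: the submitted headline and what the model returned (hypothetical schema)."""

    headline: str
    label: str           # "positive", "neutral" or "negative"
    reasoning: str       # the short explanation shown to the user
    prompt_version: str  # which prompt produced this answer, so prompt revisions can be compared
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_document(self) -> dict:
        """Flatten the record into a plain dict suitable for a document-store write."""
        return asdict(self)
```

Storing the prompt version next to each answer is one way to make the "refine prompts over time" loop measurable, because old and new prompts can be compared on the same logged headlines.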

Process

We began by researching existing studies on news sentiment and its effects on reader engagement. We found a surprising result: both negative and positive headlines could significantly affect reader interest, challenging the common belief that negativity alone drives clicks. This suggested that news outlets could benefit from a tool that helps them craft more engaging, potentially positive headlines.

To test our hypothesis, we first evaluated how well OpenAI's GPT model handled sentiment analysis. Unlike traditional sentiment analysis techniques that score text word by word, GPT's strength lay in its contextual understanding. I wrote a simple prompt asking the model to classify a given headline as positive, neutral, or negative.
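The following minimal sketch shows such a prompt, written as messages in the role/content format that OpenAI's Chat Completions API accepts. It also includes a parser that maps the model's free-text reply onto one of the three labels. The prompt wording and function names are assumptions for illustration, not the original prompt, and the API call itself is omitted.

```python
from typing import Optional

# The three sentiment categories used throughout the project.
LABELS = ("positive", "neutral", "negative")


def build_classification_messages(headline: str) -> list[dict]:
    """Return chat messages asking the model for exactly one sentiment label."""
    system_prompt = (
        "You classify the sentiment of news headlines. "
        "Reply with exactly one word: positive, neutral or negative."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Headline: {headline}"},
    ]


def parse_label(reply: str) -> Optional[str]:
    """Map the model's reply onto one of LABELS, or return None if it does not match."""
    # Tolerate surrounding whitespace, quotes, a trailing full stop and capitalisation.
    cleaned = reply.strip().strip(".!\"'").lower()
    return cleaned if cleaned in LABELS else None
```

Constraining the reply to a single word keeps parsing trivial. A reply that does not match returns `None`, so the caller can retry instead of storing a malformed label.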

To test the prompt rigorously, I scraped over 1,000 headlines from the publicly available RSS feeds of Austrian news outlets such as Kleine Zeitung, Die Presse, and Kronen Zeitung. This dataset provided a diverse range of real-world examples. To establish a baseline, Pirmin and I each manually labeled every headline as positive, neutral, or negative. These manual labels served as the benchmark against which we compared the AI's performance.
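The scraping step can be sketched with Python's standard-library XML parser. The sketch assumes standard RSS 2.0 feeds in which each `<item>` carries a `<title>`. Fetching the feeds (for example with `urllib.request`) is left out, the feed URLs are not given here, and the helper names are hypothetical. Because RSS feeds only expose recent items, repeated pulls overlap, so the sketch also drops duplicate headlines.

```python
import xml.etree.ElementTree as ET
from typing import Union


def headlines_from_rss(feed: Union[str, bytes], source: str) -> list[dict]:
    """Extract item titles from an RSS 2.0 document, tagged with their outlet."""
    root = ET.fromstring(feed)
    rows = []
    for item in root.iter("item"):
        # findtext returns None when <title> is missing, "" when it is empty.
        title = (item.findtext("title") or "").strip()
        if title:  # skip items without a usable title
            rows.append({"source": source, "headline": title})
    return rows


def deduplicate(rows: list[dict]) -> list[dict]:
    """Keep the first occurrence of each headline, ignoring case."""
    seen = set()
    unique = []
    for row in rows:
        key = row["headline"].casefold()
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique
```

The resulting rows can be written to a spreadsheet, with one empty label column per human rater.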

When we ran the dataset through the GPT model, its classifications were remarkably consistent with our manual labels. Interestingly, the AI agreed more closely with our combined human judgment than the two of us agreed with each other, which suggested the model could serve as a reliable and consistent classifier.
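One way to quantify this comparison is sketched below. It computes simple percent agreement plus Cohen's kappa, which corrects for the agreement expected by chance. Which metric was used, and how the combined human judgment was formed, is not stated above, so treat this as an illustrative method rather than the analysis we ran.

```python
from collections import Counter


def percent_agreement(a: list[str], b: list[str]) -> float:
    """Share of headlines on which two raters chose the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("expected two non-empty label lists of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    p_observed = percent_agreement(a, b)
    n = len(a)
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: probability that both raters independently pick the same label.
    p_chance = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_chance == 1.0:
        # Both raters used one identical label for everything, so agreement is total.
        return 1.0
    return (p_observed - p_chance) / (1.0 - p_chance)
```

Comparing `cohens_kappa(rater_a, rater_b)` with `cohens_kappa(model, rater_a)` and `cohens_kappa(model, rater_b)` gives a pairwise view of human-to-human versus model-to-human agreement. For the toy lists `["pos", "neg", "neu", "neg"]` and `["pos", "neg", "neg", "neg"]` (not our data), percent agreement is 0.75 and kappa is 5/9, about 0.56.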

Recognizing that journalists and writers would need more than a sentiment label, I developed a supplementary prompt that generated a reasoning step: a concise explanation of why the AI assigned a particular sentiment to a headline. This reasoning was critical for user trust, allowing journalists to follow the AI's judgment and potentially learn from its analysis.
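The supplementary step might look like the following sketch. It uses a second prompt that passes in the headline and the label already assigned, plus a small helper that trims the reply to a couple of sentences so it stays concise in the interface. The prompt text, function names, and two-sentence limit are assumptions, not the original implementation.

```python
import re


def build_reasoning_messages(headline: str, label: str) -> list[dict]:
    """Follow-up prompt asking why the headline received the given label (hypothetical wording)."""
    return [
        {
            "role": "system",
            "content": (
                "You explain sentiment classifications of news headlines "
                "to journalists in one or two short sentences."
            ),
        },
        {
            "role": "user",
            "content": f"Headline: {headline}\nAssigned sentiment: {label}\nWhy?",
        },
    ]


def concise(explanation: str, max_sentences: int = 2) -> str:
    """Trim an explanation to its first `max_sentences` sentences."""
    # Naive split after ., ! or ? followed by whitespace; adequate for short replies.
    sentences = re.split(r"(?<=[.!?])\s+", explanation.strip())
    return " ".join(sentences[:max_sentences])
```

Keeping classification and explanation in separate prompts means the label can be parsed strictly while the explanation is free text.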

Solution

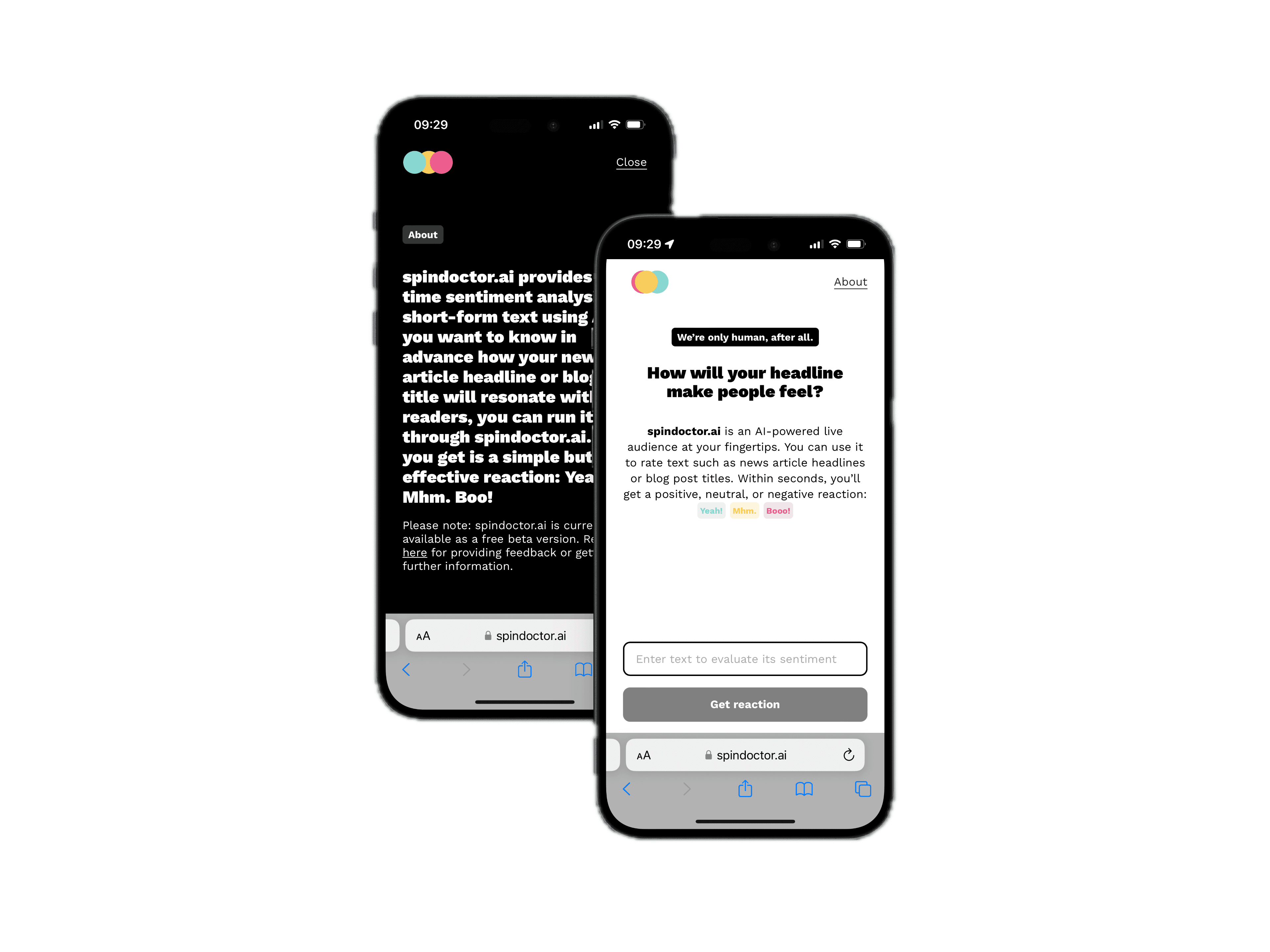

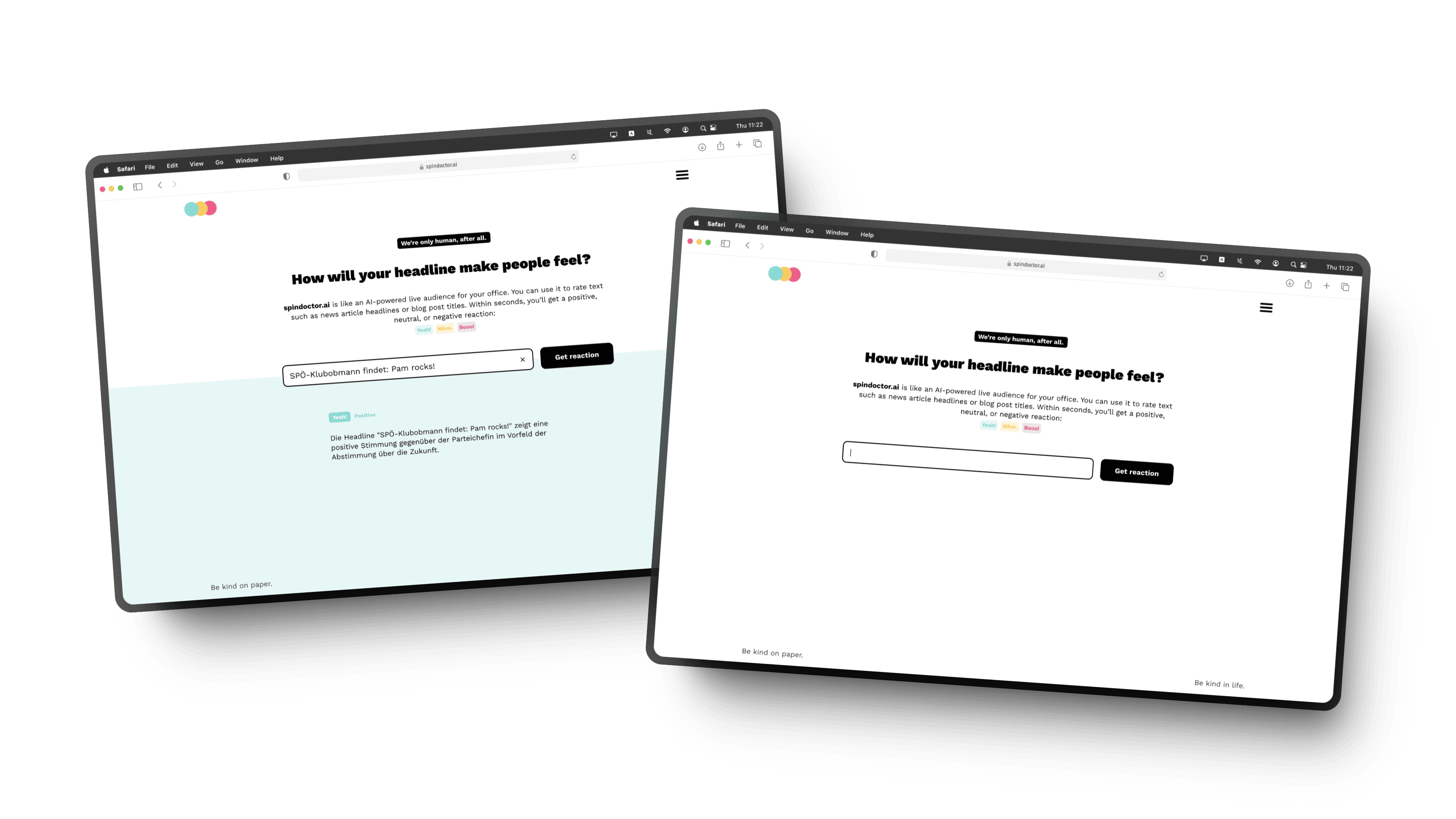

In parallel with refining the prompts, we built the front end of the application. I implemented a React-based interface with a simple, intuitive design inspired by Google's minimalist search page. The primary interaction centered on a single input field where users could enter their headline.

The Spindoctor MVP attracted over 2,000 headline checks over the course of a few days.

Upon submission, the application would return a sentiment classification (positive, neutral, negative) along with a brief explanation, all delivered within seconds. This real-time feedback was designed to fit seamlessly into a journalist's workflow, providing immediate, actionable insights.

After building the MVP, we conducted a quick design sprint to refine the user experience. Pirmin focused on creating compelling copy and fine-tuning the UI to ensure clarity and ease of use.

We tested the application with a small group of journalists and friends in the industry, gathering feedback on both the accuracy of the AI's sentiment analysis and the usability of the interface. Firebase stored each headline and AI response, which we used to refine our prompts and improve accuracy.

Within a week, Spindoctor was live and shared through our LinkedIn networks and among friends.

Impact

Within the first 24 hours of launch, Spindoctor attracted over 100 users who submitted more than 1,500 headlines for analysis.

The response exceeded our expectations, and we had to increase our OpenAI API budget to keep up with demand. Feedback from users, including journalists, was overwhelmingly positive, with many appreciating the nuanced reasoning behind the AI's classifications. This feedback loop was invaluable, helping us refine the prompts and improve the tool's reliability.

Reflection

Building Spindoctor was a thrilling venture into the AI space, particularly given the nascent state of the technology at the time. I learned firsthand the limitations and quirks of GPT, such as occasional inconsistencies in responses, which posed challenges for our backend systems. However, the rapid, iterative development process was invigorating and allowed us to quickly bring a functional product to market. The project also provided a solid foundation for future work with the OpenAI API, equipping me with the skills and confidence to tackle more complex AI-driven projects.
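Inconsistent replies, such as a full sentence where a single label was expected, can be absorbed with a validate-and-retry wrapper. The sketch below shows an assumed approach, not a description of our actual backend. Here `ask` is a hypothetical stand-in for whatever function sends the prompt to the API and returns the reply text.

```python
from typing import Callable, Optional

LABELS = {"positive", "neutral", "negative"}


def normalise(reply: str) -> Optional[str]:
    """Return a clean label if the reply is one, otherwise None."""
    word = reply.strip().strip(".!\"'").lower()
    return word if word in LABELS else None


def classify_with_retries(
    ask: Callable[[str], str], headline: str, attempts: int = 3
) -> Optional[str]:
    """Call `ask` until it yields a recognised label; give up after `attempts` tries."""
    for _ in range(attempts):
        label = normalise(ask(headline))
        if label is not None:
            return label
    # Every attempt was malformed: signal failure instead of guessing a label.
    return None
```

Returning `None` after the last attempt lets the caller show an error message instead of presenting a wrong label to the user.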

It was a valuable lesson in balancing speed and quality, navigating the constraints of a self-funded project, and harnessing AI's potential to create meaningful tools.